Enhance Precision With Retrieval-Augmented Generation (RAG)

- Jia Min Woon

- Artificial Intelligence, Weave

What is RAG?

Retrieval-augmented generation (RAG) is a method in natural language processing that combines retrieval-based and generation-based models. It aims to improve the quality of generated text by integrating relevant information from external sources. RAG has shown promise in tasks like question answering, summarization, and dialogue generation by leveraging retrieved knowledge to enhance coherence, specificity, and factual accuracy.

How does RAG work?

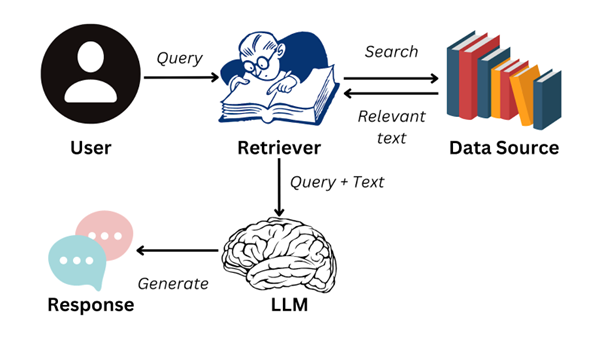

- Retrieval Component: This part retrieves relevant passages or documents from a large text collection based on the input query or prompt. These retrieved documents act as a knowledge base for generating responses.

- Generation Component: A generative model, often like GPT, uses the retrieved passages as additional input to produce a response. By including this retrieved information, the generated text is expected to be more informed and contextually relevant.

- Integration: The retrieved passages are merged into the generative process through methods like attention mechanisms or combining them with the input text. This ensures that the generated response is based on the retrieved knowledge. Learn more about how RAG works.

How RAG works | Image Source: Datacamp (2024)
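To make the retrieval component more concrete, here is a minimal sketch that ranks passages by cosine similarity between simple term-count vectors. This is an assumption for illustration only: production RAG systems typically compare learned embeddings stored in a vector database, and the names `tokenize`, `cosine_similarity`, and `retrieve` are hypothetical, not part of any library.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase the text and keep only alphanumeric word tokens.
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a: Counter, b: Counter) -> float:
    # Dot product over shared terms, divided by the product of the vector norms.
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, passages: list[str], k: int = 2) -> list[str]:
    # Score every passage against the query and return the k best matches.
    q_vec = Counter(tokenize(query))
    scored = [(cosine_similarity(q_vec, Counter(tokenize(p))), p) for p in passages]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [p for _, p in scored[:k]]


passages = [
    "Orders usually ship within two business days.",
    "Our office is closed on public holidays.",
    "Tracking numbers are emailed once an order ships.",
]
print(retrieve("When will my order ship?", passages, k=2))
# ['Orders usually ship within two business days.', 'Tracking numbers are emailed once an order ships.']
```

Note that this toy version treats "order" and "orders" as different words; real retrievers use embeddings or stemming precisely to catch matches like that.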

Put simply: the user sends a prompt, and the retrieval component searches the external data source for relevant information. It then passes the prompt together with the retrieved information to the Large Language Model (LLM), which uses both to generate a response that is more accurate and better grounded than one based on the prompt alone.
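The flow above (retrieve, combine with the prompt, generate) can be sketched in a few functions. This is a minimal illustration under stated assumptions: the keyword-overlap retriever is a toy, the prompt wording is one possible template, and `llm` is any function that takes a prompt string and returns text, standing in for a real model client. None of these names come from a specific library.

```python
import re
from typing import Callable


def words(text: str) -> set[str]:
    # Lowercased word tokens, with punctuation stripped.
    return set(re.findall(r"\w+", text.lower()))


def keyword_retrieve(question: str, documents: list[str], k: int = 2) -> list[str]:
    # Toy retriever: rank documents by how many distinct words they share with the question.
    q_words = words(question)
    ranked = sorted(documents, key=lambda d: len(q_words & words(d)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, passages: list[str]) -> str:
    # Combine the user's prompt with the retrieved information (the "augmented" prompt).
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


def rag_answer(question: str, documents: list[str], llm: Callable[[str], str], k: int = 2) -> str:
    # 1. Retrieve relevant passages, 2. augment the prompt, 3. let the LLM generate.
    passages = keyword_retrieve(question, documents, k)
    return llm(build_prompt(question, passages))


documents = [
    "The return window is 30 days from delivery.",
    "Standard shipping takes 3 to 5 business days.",
    "Gift cards cannot be exchanged for cash.",
]


# Placeholder "model" that returns the prompt unchanged, so we can inspect
# what a real LLM would receive. Swap in an actual model client here.
def echo_llm(prompt: str) -> str:
    return prompt


print(rag_answer("How many days is the return window?", documents, echo_llm, k=1))
```

Passing the model in as a plain callable keeps the retrieval and prompt-building logic independent of any particular LLM provider.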

Why should you apply RAG?

- Enhanced relevance: By incorporating external knowledge sources, RAG makes generated text more relevant, grounding responses in the facts and context of the retrieved material.

- Improved coherence: Integrating retrieved passages into the generative process keeps responses consistent with the source material and on topic, which makes them more coherent and fluent.

- Increased factual accuracy: Because responses are based on retrieved sources rather than only on what the model learned during training, RAG reduces unsupported or outdated answers. This makes it well suited to tasks such as question answering, summarization, and dialogue generation, where responses must reflect external knowledge.

How do you utilize RAG?

Getting good results from a RAG-based assistant mostly comes down to how you ask. A few practical tips:

- Ask simple, specific questions, and provide context so the system understands what you need.

- Use plain, everyday language.

- If the first response isn’t right, refine your query. RAG systems can draw on multiple sources, so a rephrased question may surface different, more relevant material.

- Use RAG to summarize long documents or articles, and ask for examples or detailed explanations to deepen your understanding.

- Personalize your queries by mentioning specific needs or preferences, and follow up based on the responses you receive.

- Don’t hesitate to ask any question, no matter how simple it seems; a clear question is the best way to get clear, accurate, and relevant information.

Examples of RAG in LLMs

- Customer Support

  - Example: A customer asks about the status of their order.

  - RAG Application: The RAG system first retrieves the latest order information from the company’s database, which might include the shipping status, tracking number, and expected delivery date. The generative model then uses this retrieved data to craft a personalized response.

- Healthcare

  - Example: A patient asks about the side effects of a newly prescribed medication.

  - RAG Application: The RAG system retrieves detailed information from medical databases, drug information leaflets, and clinical studies, covering common and rare side effects, interactions with other medications, and expert guidelines. The generative model then compiles this information into a clear, patient-friendly response.

- Legal Assistance

  - Example: A user asks about the legal implications of a contract clause.

  - RAG Application: The RAG system retrieves relevant legal texts, precedents, and expert interpretations from legal databases and case law repositories. The generative model then interprets the clause in light of the retrieved material and produces a detailed explanation.
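In the customer support example, retrieval is a structured lookup by order ID rather than a text search. The sketch below assumes a hypothetical in-memory `ORDERS` table in place of a real database; the sample record is invented for illustration, and its fields follow the ones the example mentions (shipping status, tracking number, expected delivery date). The resulting prompt is what would be sent to the generative model.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Order:
    order_id: str
    status: str
    tracking_number: str
    expected_delivery: str


# Hypothetical in-memory stand-in for the company's order database.
ORDERS = {
    "A1001": Order("A1001", "shipped", "TRK-55821", "2024-06-14"),
}


def retrieve_order(order_id: str) -> Optional[Order]:
    # Retrieval step: an exact lookup by order ID rather than a similarity search.
    return ORDERS.get(order_id)


def build_support_prompt(customer_message: str, order_id: str) -> str:
    # Augmentation step: place the retrieved record in front of the customer's message.
    order = retrieve_order(order_id)
    if order is None:
        record = f"No order found with ID {order_id}."
    else:
        record = (
            f"Order {order.order_id}: status={order.status}, "
            f"tracking number={order.tracking_number}, "
            f"expected delivery={order.expected_delivery}"
        )
    return (
        "You are a customer support assistant. Reply using only the order record below.\n"
        f"Order record: {record}\n"
        f"Customer: {customer_message}\n"
        "Assistant:"
    )


print(build_support_prompt("Where is my package?", "A1001"))
```

The healthcare and legal examples would instead search unstructured text, but the augmentation step, putting retrieved facts in front of the question, stays the same.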

Weave now supports RAG

By drawing on external knowledge sources, RAG improves the accuracy of generated responses while preserving the flexibility and creativity of generative models.

RAG is now supported on Weave.

The RAG module in Weave works hand in hand with the Dataset module. After you upload large text files through the Dataset module, the RAG module splits them into manageable chunks. An LLM can only process a limited amount of text in a single prompt (its context window), so an entire large file usually can’t be passed in at once. Chunking works around this limit: only the chunks relevant to a query need to be passed to the LLM, which keeps responses focused and relevant. Don’t hesitate to try Weave now!
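This post does not say how Weave’s RAG module decides where to split, so the following is only a generic sketch of one common chunking strategy, not Weave’s implementation: fixed-size chunks counted in words, with a small overlap so that text cut at a chunk boundary still appears with some of its surrounding context in the neighboring chunk. The function name and default sizes are assumptions; real systems often measure chunk size in model tokens rather than words.

```python
def chunk_text(text: str, chunk_size: int = 200, overlap: int = 20) -> list[str]:
    """Split text into chunks of up to `chunk_size` words, where consecutive
    chunks share `overlap` words. (Illustrative sketch, not Weave's API.)"""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must satisfy 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once this chunk reaches the end, so the last chunk isn't just leftover overlap.
        if start + chunk_size >= len(words):
            break
    return chunks


sample = " ".join(f"w{i}" for i in range(10))
print(chunk_text(sample, chunk_size=4, overlap=1))
# ['w0 w1 w2 w3', 'w3 w4 w5 w6', 'w6 w7 w8 w9']
```

Each chunk can then be scored against a query on its own, so only the most relevant ones are sent to the LLM.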