Qwen 1.5: An Open-Source, Multilingual, High-Performance Language Model

- Jia Min Woon

- Weave

Figure 1: Qwen 1.5 | Image Source: QwenLM

What is Qwen 1.5?

Qwen 1.5 is the improved version of Qwen, a family of large language models (LLMs) designed for advanced natural language processing (NLP). This version is open-sourced and includes both base and chat models in sizes from 0.5 billion to 110 billion parameters, as well as a specialized mixture-of-experts (MoE) model. Qwen 1.5 also offers quantized models, including Int4 and Int8 GPTQ models and formats such as AWQ and GGUF. This variety lets developers choose a model size and type that suits their needs, whether for research, application development, or resource-limited environments.
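To make the trade-off between model size and quantization concrete, here is a rough back-of-the-envelope sketch. It estimates only the memory needed to hold the weights (parameters times bits per parameter) and ignores activations, the KV cache, and any per-format overhead, so real requirements will be higher. The function name `weight_memory_gb` and the chosen sizes are illustrative, not part of any Qwen tooling.

```python
def weight_memory_gb(params_billion: float, bits_per_param: int) -> float:
    """Approximate memory for model weights alone, in gigabytes (1 GB = 1e9 bytes).

    params_billion * 1e9 parameters * bits_per_param / 8 bytes, divided by 1e9,
    simplifies to params_billion * bits_per_param / 8.
    """
    return params_billion * bits_per_param / 8


if __name__ == "__main__":
    # Illustrative sizes from the range mentioned above; 16 bits stands in for
    # half precision, 8 and 4 bits for Int8 and Int4 quantization.
    for size in (0.5, 7, 72, 110):
        for bits in (16, 8, 4):
            print(f"{size}B params at {bits}-bit: ~{weight_memory_gb(size, bits):.2f} GB")
```

By this estimate, a 72B model needs about 144 GB for weights at 16 bits and about 36 GB at 4 bits, which is why the quantized variants matter on limited hardware.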

How Does Qwen 1.5 Work?

Qwen 1.5 is built on the transformer architecture and integrated into Hugging Face's transformers library, making it easy to load and use without custom code. It is supported by deployment and fine-tuning frameworks such as vLLM, SGLang, AutoGPTQ, Axolotl, and LLaMA-Factory. The models handle long-context understanding, supporting up to 32,768 tokens, and use techniques like Direct Preference Optimization (DPO) and Proximal Policy Optimization (PPO) to align responses with human preferences. This alignment is intended to reduce unwanted outputs and keep responses relevant. These features, along with strong multilingual capabilities and robust performance on various benchmarks, make Qwen 1.5 a versatile tool for different NLP tasks.
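As an illustration of loading a chat model through the transformers library, the sketch below assumes `transformers` (version 4.37 or later) and `torch` are installed and that the `Qwen/Qwen1.5-0.5B-Chat` checkpoint is reachable on the Hugging Face Hub. The helper names `build_messages` and `generate_reply` are hypothetical and only used here for illustration; the first call downloads the model weights.

```python
def build_messages(user_prompt: str, system_prompt: str = "You are a helpful assistant."):
    """Build a chat history in the role/content format used by chat templates."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]


def generate_reply(user_prompt: str,
                   model_id: str = "Qwen/Qwen1.5-0.5B-Chat",  # assumed checkpoint name
                   max_new_tokens: int = 128) -> str:
    """Load the tokenizer and model, then generate one reply (hypothetical helper)."""
    # Imported lazily so the message helper above works without these packages.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(model_id, torch_dtype="auto")

    # Render the chat history into the prompt string the chat model expects.
    prompt = tokenizer.apply_chat_template(
        build_messages(user_prompt), tokenize=False, add_generation_prompt=True
    )
    inputs = tokenizer([prompt], return_tensors="pt").to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # Keep only the newly generated tokens, dropping the echoed prompt.
    new_tokens = output_ids[0][inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)


if __name__ == "__main__":
    print(generate_reply("Give a one-sentence introduction to large language models."))
```

The smallest chat model is used here so the example can run on modest hardware; swapping in a larger checkpoint should only require changing `model_id`, subject to available memory.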

Why Should You Use Qwen 1.5?

Qwen 1.5 offers several benefits for researchers and application developers alike. As an open-source model, it provides transparency and flexibility for customization and further development. Its integration with Hugging Face transformers simplifies the process of using the models, and the support for multiple quantization formats helps the models run efficiently in low-resource environments. The range of model sizes provides flexibility in balancing performance and computational cost. Additionally, Qwen 1.5 performs strongly on benchmarks for language understanding, coding, reasoning, and multilingual tasks, making it a strong choice for both general-purpose and specialized applications. The improvements in alignment with human preferences are meant to make the responses generated by Qwen 1.5 more relevant and accurate, enhancing the user experience in chat and other interactive applications.

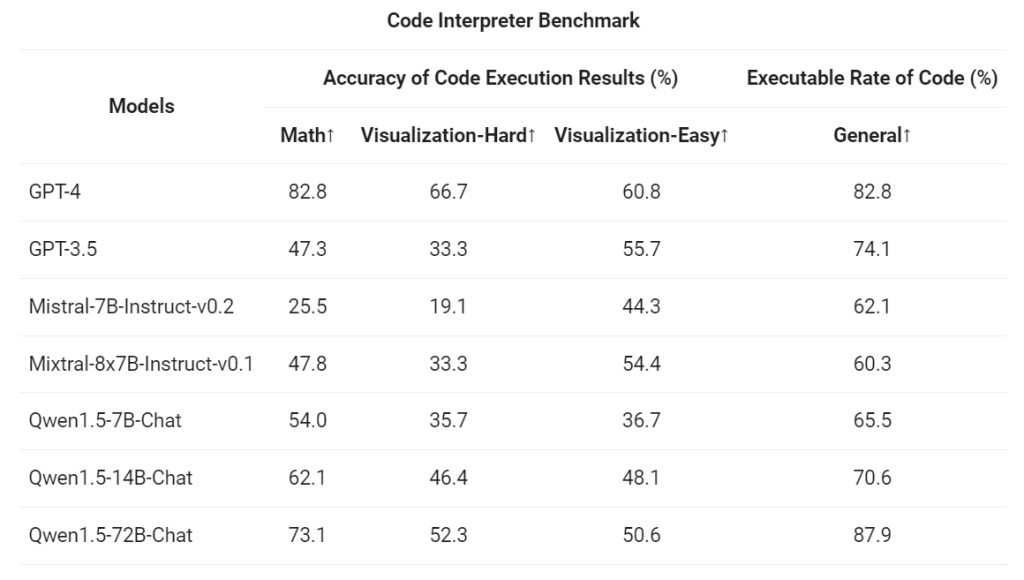

Figure 2: Code Interpreter Benchmark | Image Source: QwenLM

Based on Figure 2, Qwen 1.5 72B Chat has the highest executable rate of code among several language models. The executable rate is the share of generated code that runs without raising an error. It should not be confused with code coverage, which measures how much of a program a test suite exercises through metrics such as function, statement, branch, and condition coverage. A higher executable rate means fewer outright failures in the generated code, although code that runs can still produce incorrect results.
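A minimal sketch of how an executable rate could be computed is shown below. The benchmark's actual procedure is not described here, so this is an assumption: each snippet counts as executable if it compiles and runs without raising an exception. The function names are hypothetical, and running untrusted generated code with `exec` is unsafe outside a sandbox.

```python
def runs_without_error(snippet: str) -> bool:
    """Return True if the snippet compiles and executes without raising."""
    try:
        # Fresh globals so snippets cannot affect each other.
        # WARNING: only run generated code like this inside a sandbox.
        exec(compile(snippet, "<generated>", "exec"), {})
        return True
    except Exception:
        # Covers syntax errors at compile time and runtime errors alike.
        return False


def executable_rate(snippets) -> float:
    """Fraction of snippets that run without error (0.0 for an empty list)."""
    if not snippets:
        return 0.0
    return sum(runs_without_error(s) for s in snippets) / len(snippets)


if __name__ == "__main__":
    samples = [
        "total = sum(range(10))",   # runs fine
        "result = 1 / 0",           # raises ZeroDivisionError
        "def broken(:\n    pass",   # syntax error
    ]
    print(f"Executable rate: {executable_rate(samples):.2f}")
```

Note that the first sample would still count as executable even if `total` held the wrong value for the task, which is why executable rate is usually reported alongside accuracy of the results.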

Why is it Better Than its Predecessors?

Qwen 1.5 outperforms the previous version and other competitive models in several areas:

- Enhanced Performance: Qwen 1.5 shows superior performance across benchmarks, with the 72B parameter model surpassing Llama2-70B in language understanding, reasoning, and math.

- Multilingual Capabilities: The model excels in multilingual tasks, covering 12 diverse languages effectively.

- Support for Long Contexts: With support for contexts up to 32K tokens, Qwen 1.5 meets the need for long-context understanding.

- Ease of Use: Integration with Hugging Face transformers and support for multiple frameworks and quantized models simplify development and deployment.

- Alignment with Human Preferences: Advanced alignment techniques help Qwen 1.5 generate responses that match human preferences, improving interaction quality.
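To give a sense of what preference-based alignment optimizes, here is a small sketch of the standard Direct Preference Optimization (DPO) loss for a single preference pair. It is the generic published formulation, not Qwen's training code; the log-probability values, the function name `dpo_loss`, and the `beta` value are illustrative assumptions.

```python
import math


def dpo_loss(policy_chosen_logp: float, policy_rejected_logp: float,
             ref_chosen_logp: float, ref_rejected_logp: float,
             beta: float = 0.1) -> float:
    """DPO loss for one (chosen, rejected) response pair.

    loss = -log(sigmoid(beta * ((pi_c - ref_c) - (pi_r - ref_r))))
    where each term is the log-probability of a full response.
    """
    chosen_margin = policy_chosen_logp - ref_chosen_logp
    rejected_margin = policy_rejected_logp - ref_rejected_logp
    logits = beta * (chosen_margin - rejected_margin)

    # -log(sigmoid(x)) = log(1 + exp(-x)), written to avoid overflow for large |x|.
    if logits >= 0:
        return math.log1p(math.exp(-logits))
    return -logits + math.log1p(math.exp(logits))


if __name__ == "__main__":
    # Illustrative numbers: the policy raises the chosen response's
    # log-probability relative to the reference and lowers the rejected one's.
    print(dpo_loss(-10.0, -14.0, -12.0, -12.0))
```

The loss shrinks as the model assigns relatively more probability to the preferred response than the reference model does, which is how the training signal pushes outputs toward human preferences.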

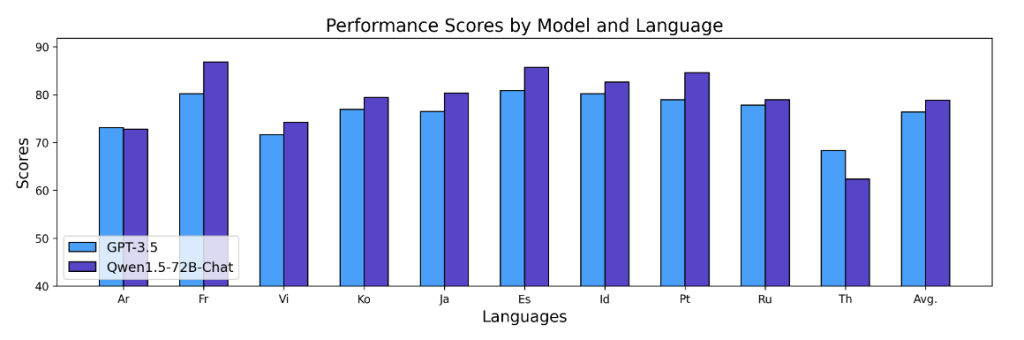

Figure 3: Language Performance Comparison | Image Source: QwenLM

Based on Figure 3, Qwen 1.5's language performance surpasses that of GPT-3.5 on average. The languages compared include French, Vietnamese, Korean, Japanese, Spanish, Bahasa Indonesia, Portuguese, and Russian.

Qwen 1.5 is Supported on Weave Now

Qwen 1.5 is now supported on Weave, a platform designed to simplify the deployment and management of machine learning models. Weave's user-friendly interface and deployment capabilities allow users to integrate Qwen 1.5 into their applications easily, without deep technical expertise. This integration lets users take advantage of Qwen 1.5's features, such as its range of model sizes, multilingual capabilities, and open-source status, within Weave. Developers can quickly deploy Qwen 1.5 models and scale their applications across different environments. Try out Qwen 1.5 now on Weave!